
Securing AKS Clusters and Applications

Welcome to this new post, where we will be discussing the security of Azure Kubernetes Service (AKS) clusters and workloads.

Before we get started, a huge thank you to Joe (@wedoazure), my former colleague from Ireland, and his fellow MVP Thomas (@tamstar1234) for launching this year’s Azure Spring Clean, and to all the other people contributing to this community-driven event!

This is a comprehensive look at end-to-end best practices for increasing the security posture of your Kubernetes/containerized apps, from the development stages through to workload runtime, while laying down a solid foundation for secure AKS environments!

In addition to this post, a video walks you through everything covered here; please go check it out!

Intro

Microsoft’s Azure Kubernetes Service (AKS) is one of the most popular managed Kubernetes platforms. As is the case for any Kubernetes environment, securing AKS clusters is the responsibility of the Azure customer.

The Kubernetes ecosystem’s security best practices apply to all clusters regardless of where they are hosted, whether on-premises or in the public cloud. However, AKS has some platform-specific requirements that operators and developers must consider to ensure that their clusters and workloads are protected from malicious attacks and breaches.

Security 101

Before diving into the different options and tools at our disposal to improve the security posture of our container platforms, I will briefly introduce some key concepts.

Kubernetes security is most effective when it is comprehensive and starts from the early phases: building container images, pushing them to centralised image repositories where they are scanned for vulnerabilities and compliance issues, and finally running them in your cluster.

Therefore, securing your Kubernetes environments transcends clusters and the workloads they run. It falls within a wider DevSecOps practice where all the components used to deliver containerized applications need to be secured and locked down:

- CI/CD pipelines

- Container images build and ship

- Clusters design and configuration

- Nodes and Pods Networking

- Applications design and build

- Container runtime

Engage Everyone in the security process

Security is the concern and responsibility of everyone in the organisation! Every individual and team working together to deliver infrastructure and containerized applications should adopt security-minded practices.

Devs should make sure that the container base images they build their applications on are secure and compliant. We will see later on what this means and take a look at some of the tools and actions they can leverage to achieve this.

Ops need to ensure that they build and operate AKS clusters following the best practices we will cover in the next section.

SecOps will contribute by defining policies and enforcing them using the tools and options best suited for containers; we will look at these in detail in a bit!

Minimise the Attack Surface

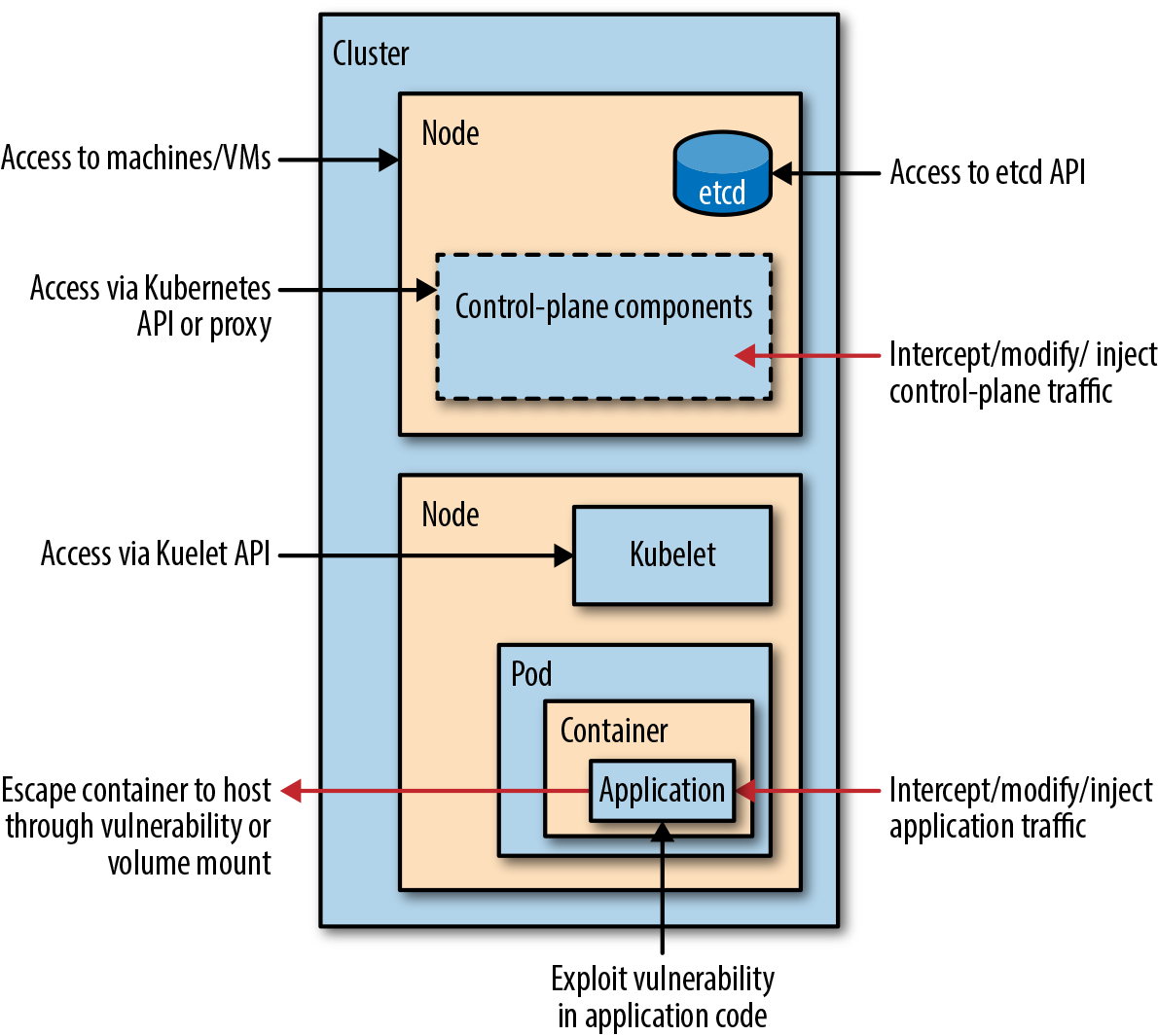

Another important aspect of securing the k8s cluster in particular is minimising its attack surface.

The attack surface is the set of all possible ways the cluster can be attacked from. The more complex the system, the bigger the attack surface, and therefore the more likely it is that an attacker will find a way in.

In the diagram above, you can see the different k8s components that are vulnerable to attacks and therefore need to be protected with the appropriate tools and security options:

- Worker nodes/VMs

- K8s API

- etcd

- Kubelet API

- The containers used for applications through vulnerabilities or mounted volumes

- The application code running within the applications/containers, through vulnerability exploits

Implement Layered Defense (Security in Depth)

To achieve this, you need to apply controls, configurations, and best practices that mitigate all of these potential modes of attack. This means adopting a security-in-depth approach, which implies having several layers of defense against attacks on your Kubernetes cluster: if you rely on a single defensive measure, attackers might find their way around it.

Throughout this post you will see how to do this for each specific component.

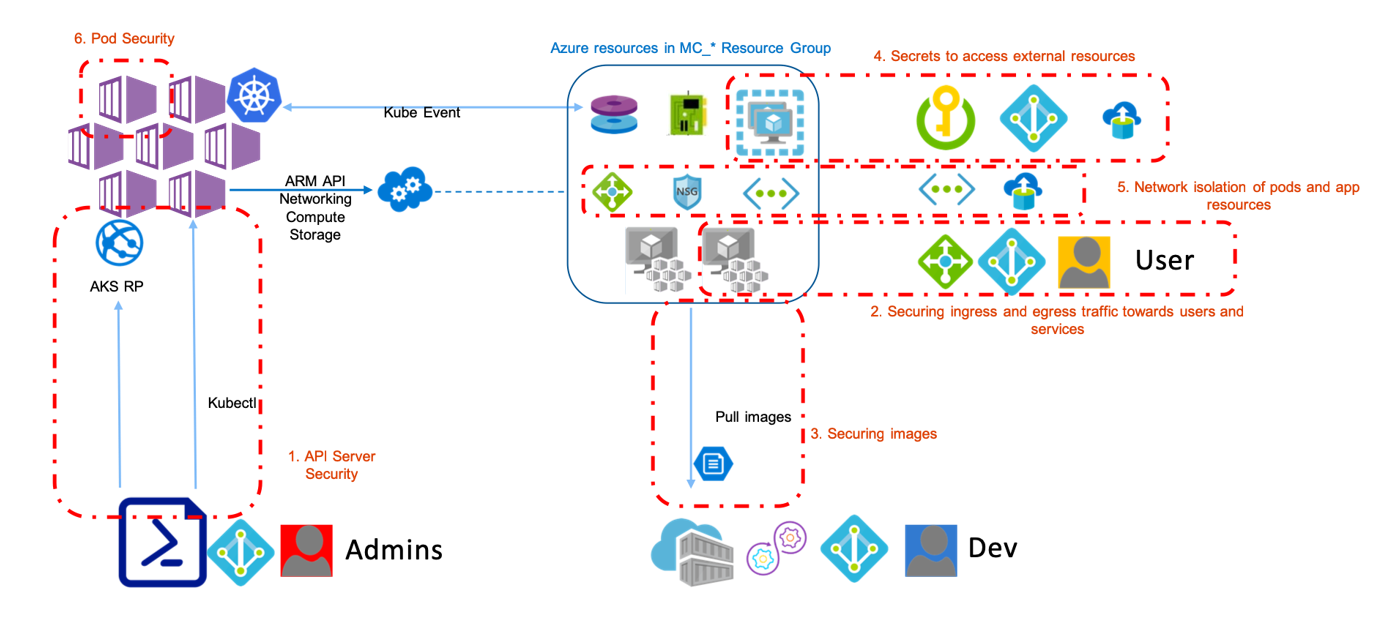

The diagram above depicts the kind of layered defense mechanisms you would be using:

- Securing API server authentication/authorization

- Securing ingress and egress traffic from and to your cluster

- Securing container images

- Securing secrets and connection strings in your applications

- Securing network isolation of pods and app resources

- Securing pods and pod capabilities

AKS Security in action

Secure the Cluster

Making AKS clusters more secure requires security to be baked into their design. Understanding the fundamentals of Kubernetes security and the specific AKS security options before creating clusters will make it easier to secure and manage them.

Some of the critical AKS security features can only be enabled at cluster creation phase. In the case of existing clusters initially created without those features, it is highly recommended to build new clusters and migrate existing workloads into them.

Consistent configurations across all clusters make them easier to manage and prevent issues stemming from the incorrect assumption that all clusters have the same protections. In particular, clusters used for other application lifecycle stages, like development and staging, should have the same security settings as production.

It is a best practice to automate the creation of AKS clusters, ensuring that every cluster in Dev, Staging and Production gets the same configuration and the same level of protection. This will help you:

- Test the security posture of a cluster and its workload before moving to production, as they are executed on identical environments

- Ensure applications running on clusters with those settings will still function as expected by the time they are deployed to production

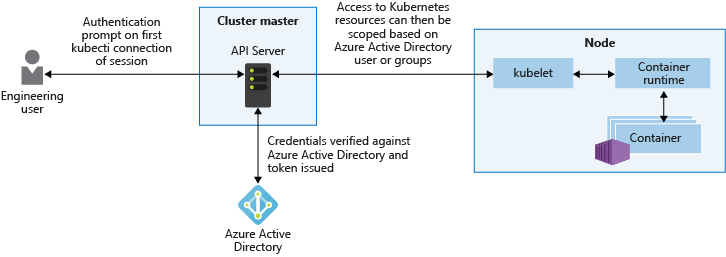

Enable Kubernetes RBAC

Kubernetes Role-Based Access Control (RBAC) controls authorization for a cluster’s Kubernetes API, for both users and workloads in the cluster. AKS offers the option of integrating Kubernetes RBAC with Azure Active Directory (Azure AD).

With Azure AD-integrated clusters in AKS, you use your existing user and group accounts to authenticate users to the API server.

Note: Azure AD integration for AKS can only be enabled when you create a new, RBAC-enabled cluster. You can’t enable Azure AD on an existing AKS cluster.
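As a sketch of what Azure AD-integrated RBAC looks like in practice, the manifests below grant an Azure AD group read-only access to pods in a single namespace. The `dev` namespace, the role and binding names, and the all-zero group ID are hypothetical placeholders; on Azure AD-integrated AKS clusters, a group subject is referenced by its Azure AD object ID.

```yaml
# Hypothetical example: read-only access to pods in the "dev" namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: dev
rules:
- apiGroups: [""]                 # "" is the core API group, where pods live
  resources: ["pods", "pods/log"]
  verbs: ["get", "list", "watch"] # read-only verbs
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-team-pod-reader
  namespace: dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-reader
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  # Placeholder: replace with the object ID of your Azure AD group
  name: "00000000-0000-0000-0000-000000000000"
```

Binding roles to Azure AD groups rather than individual users keeps access reviews in Azure AD, where group membership is already managed.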

Enable API Server Firewall (--api-server-authorized-ip-ranges)

In Kubernetes, the API server receives requests to perform actions in the cluster such as to create resources or scale the number of nodes. The API server is the central way to interact with and manage a cluster. To improve cluster security and minimize attacks, the API server should only be accessible from a limited set of IP address ranges.

By default your AKS cluster’s API server is exposed on a public IP with no restrictions. To add a layer of filtering (until AKS Private Clusters go GA), use API server authorized IP address ranges to limit which IP addresses and CIDRs can access the control plane. These IP address ranges are usually addresses used by your on-premises networks or public IPs.

```bash
az aks create \
    --...... \
    --api-server-authorized-ip-ranges a.b.c.d/24,x.y.z.t/28
```

Note: This feature only works for new AKS clusters that you create.

Block Pod Access to Host/VM Instance Metadata

The Azure VM instance metadata endpoint, when accessed from an Azure VM, returns a great deal of information about the VM’s configuration, including, depending on the VM and cluster configuration, Azure Active Directory tokens. This endpoint is accessible by any AKS container on the node by default. Most workloads will not need this information and having access to that information can carry substantial risks.

To disable this access, add a network policy in all user namespaces to block pod egress to the metadata endpoint.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: block-node-metadata
spec:
  podSelector:
    matchLabels: {}        # empty selector: applies to every pod in the namespace
  policyTypes:
  - Egress
  egress:
  - to:
    - ipBlock:
        cidr: 0.0.0.0/0    # Preferably something smaller here
        except:
        - 169.254.169.254/32
```

Increase Node security

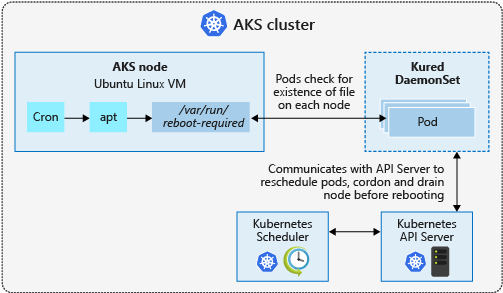

AKS nodes are Azure virtual machines that you manage and maintain.

The Azure platform automatically applies OS security patches to Linux nodes on a nightly basis. If a Linux OS security update requires a host reboot, that reboot is not automatically performed. You can manually reboot the Linux nodes, or a common approach is to use Kured, an open-source reboot daemon for Kubernetes. Kured runs as a DaemonSet and monitors each node for the presence of a file indicating that a reboot is required. Reboots are managed across the cluster using the same cordon and drain process as a cluster upgrade.

Check this page for more information.

Turn on Audit Logging

AKS control plane audit logging is still in Preview. Turning it on will help you:

- Keep a chronological record of calls that have been made to the Kubernetes API server

- Investigate suspicious API requests: authorization failures are logged with a "Forbidden" status message and could mean that an attacker is trying to abuse stolen credentials

- Collect statistics

- Create monitoring alerts for unwanted API calls

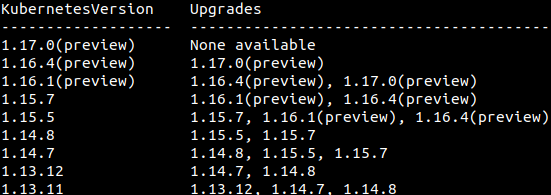

Upgrade AKS Clusters

It is a best practice to continuously upgrade your AKS clusters to the latest generally available (non-preview) Kubernetes version supported by AKS. This ensures that you get the latest security patches, features and updates.

Azure provides tools to orchestrate the upgrade of an AKS cluster and its components. This upgrade orchestration includes both the Kubernetes master and agent components. To start the upgrade process, you specify one of the Kubernetes versions available for your cluster. Azure then safely cordons and drains each AKS node and performs the upgrade.

Cordon and drain

During the upgrade process, AKS nodes are individually cordoned from the cluster so new pods aren’t scheduled on them. The nodes are then drained and upgraded as follows:

- A new node is deployed into the node pool. This node runs the latest OS image and patches.

- One of the existing nodes is identified for upgrade. Pods on this node are gracefully terminated and scheduled on the other nodes in the node pool.

- This existing node is deleted from the AKS cluster.

- The next node in the cluster is cordoned and drained using the same process until all nodes are successfully replaced as part of the upgrade process. For more information, see Upgrade an AKS cluster.
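Because the upgrade drains nodes one at a time, it helps to tell Kubernetes how many replicas of a workload must stay up while pods are evicted. A minimal sketch using a PodDisruptionBudget, assuming a hypothetical `frontend` Deployment labelled `app: frontend` that runs at least three replicas (`policy/v1` requires Kubernetes 1.21 or later; older clusters use `policy/v1beta1`):

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: frontend-pdb          # hypothetical name
spec:
  minAvailable: 2             # evictions during drain are refused if fewer than 2 pods would remain
  selector:
    matchLabels:
      app: frontend           # assumed label on the frontend pods
```

Drains use the eviction API, which honours these budgets, so the upgrade waits rather than taking every replica down at once.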

Disposable Infrastructure

Another great way of performing cluster upgrades (the one I prefer) is to build a new cluster, move the old cluster’s workloads to it, and then direct users to the new cluster.

This exercise is beneficial for the following reasons:

- Integrate new features into your clusters (especially those that can only be enabled at cluster creation)

- Validate that your workloads’ code and configuration are compatible with the new K8s version, and remediate if needed

- Build solid and continuously improved Infrastructure as Code (IaC) pipelines

- Test and improve those pipelines on a regular basis

- Build confidence in your workload deployment pipelines

Manage Kubernetes Secrets

There are two options to store and secure secrets/connection strings in AKS:

- Using the default mechanism for configuring and maintaining secrets via the Secret object model

- Not keeping secret information in your cluster at all, but in Azure Key Vault
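For the first option, a minimal sketch of a Secret consumed by a pod as a read-only volume is shown below; the names, image and connection string are hypothetical. Keep in mind that Secret values are only base64-encoded, not encrypted, so anyone allowed to read Secrets in the namespace can read them.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials            # hypothetical name
type: Opaque
stringData:                       # plain text here; the API server stores it base64-encoded
  connectionString: "Server=db.example.com;User Id=app;Password=change-me"
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
  - name: app
    image: myregistry.azurecr.io/my-app:1.0.0   # hypothetical image
    volumeMounts:
    - name: db-credentials
      mountPath: /etc/secrets     # each key becomes a file, e.g. /etc/secrets/connectionString
      readOnly: true
  volumes:
  - name: db-credentials
    secret:
      secretName: db-credentials
```

Mounting the secret as a file rather than an environment variable avoids it leaking into process listings or crash dumps that capture the environment.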

Using Azure Key Vault

As of today, there isn’t a native AKS integration with Azure Key Vault. However, the Azure team has been developing the Key Vault FlexVolume driver, which provides this seamless integration.

With Key Vault, you store and regularly rotate secrets such as credentials, storage account keys, or certificates. You can integrate Azure Key Vault with an AKS cluster using a FlexVolume: the FlexVolume driver lets the AKS cluster natively retrieve credentials from Key Vault and securely provide them only to the requesting pod. You can use a pod managed identity to request access to Key Vault and retrieve the credentials you need through the FlexVolume driver.

Azure Key Vault with FlexVol is intended for use with applications and services running on Linux pods and nodes.
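A sketch of a pod mounting a Key Vault secret through the FlexVolume driver is shown below. The option names follow the Key Vault FlexVolume project’s README as I understand it at the time of writing, so verify them against the version you deploy; every vault, resource group, subscription, tenant and binding name is a placeholder. The `aadpodidbinding` label is how AAD Pod Identity matches the pod to an identity when `usepodidentity` is enabled.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-with-keyvault
  labels:
    aadpodidbinding: my-app        # placeholder: selector of an AzureIdentityBinding
spec:
  containers:
  - name: app
    image: myregistry.azurecr.io/my-app:1.0.0   # hypothetical image
    volumeMounts:
    - name: kv-secrets
      mountPath: /kvmnt            # each Key Vault object appears as a file here
      readOnly: true
  volumes:
  - name: kv-secrets
    flexVolume:
      driver: "azure/kv"
      options:
        usepodidentity: "true"             # authenticate with the pod's managed identity
        keyvaultname: "my-keyvault"        # placeholder
        keyvaultobjectnames: "db-password" # placeholder: secret name in Key Vault
        keyvaultobjecttypes: secret        # secret, key or cert
        resourcegroup: "my-resource-group" # placeholder
        subscriptionid: "<subscription-id>"
        tenantid: "<tenant-id>"
```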

Secure the container images

After discussing the measures and options that help make your clusters more secure, let’s see how to secure the container images that will be used on these clusters.

Build Secure Images

Kubernetes workloads are built around container images. Ensuring that those images are difficult to exploit and are kept free of security vulnerabilities should be a cornerstone of your AKS security strategy.

Following a few best practices for building secure container images will help:

- Minimize the exploitability of running containers and simplify both security updates and scanning.

- Make it much more difficult for malicious attacks to compromise or exploit the containers in your cluster, because the images contain only the files required for the application’s runtime.

You can achieve this by following the steps below:

- Use a minimal, secure base image. Google’s “Distroless” images are a good choice because they do not install OS package managers or shells.

- Only install tools needed for the container’s application. Debugging tools should be omitted from production containers. You can leverage multi-stage Docker builds to keep build-time tooling out of the final image.

- Instead of putting exploitable tools like curl in your image for long-running applications, if you only need network tools at pod start-time, consider using separate init containers or delivering the data using a more Kubernetes-native method, such as ConfigMaps.

- If you do need to install additional OS packages, remove the package manager from the image in a later build step.

- Keep your images up-to-date. This practice includes watching for new versions of both the base image and any third-party tools you install.
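The init-container pattern from the list above can be sketched as follows: a short-lived init container that has `curl` downloads a file into a shared `emptyDir` volume, and the long-running application container, built from a minimal image with no network tools, only reads it. The `curlimages/curl` tag, the URL and the application image are assumptions for illustration; pin images you have vetted.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-with-init-fetch        # hypothetical name
spec:
  initContainers:
  - name: fetch-config             # runs to completion before the app starts
    image: curlimages/curl:7.68.0  # assumed tag; curl lives only in this container
    command: ["curl", "-fsSL", "-o", "/work/config.json",
              "https://config.example.com/app/config.json"]   # hypothetical URL
    volumeMounts:
    - name: workdir
      mountPath: /work
  containers:
  - name: app
    image: myregistry.azurecr.io/my-app:1.0.0   # hypothetical distroless-based image
    volumeMounts:
    - name: workdir
      mountPath: /config           # the app reads /config/config.json
      readOnly: true
  volumes:
  - name: workdir
    emptyDir: {}                   # scratch space shared by both containers
```

Once the init container exits, `curl` is no longer running anywhere in the pod, so it cannot be abused by an attacker who compromises the application.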

Secure the container runtime

As you develop and run applications in AKS, the security of your pods is a key consideration. Your applications should be designed following the principle of least privilege. You don’t want credentials like database connection strings, keys, or secrets and certificates exposed to the outside world, where an attacker could take advantage of those secrets for malicious purposes.

You should not add them to your code or embed them in your container images. Doing this would create a risk for exposure and limit the ability to rotate those credentials as the container images will need to be rebuilt.

We will go through the different options below, but you can also refer to this Best Practices article to learn how to secure pods in AKS.

Secure Pod access to resources

Another way of attacking the cluster would be to break out of the container and try to get access to the host itself, to read data from other containers or the kubelet configuration, in order to further attack the cluster and escalate privileges.

You can mitigate this by leveraging the following common security context definitions:

- allowPrivilegeEscalation defines whether a process in the container can gain more privileges than its parent process (for example, becoming root through a setuid binary). Design your applications so this setting is always set to false.

- Linux capabilities grant a process a subset of root’s privileges, some of which give access to the underlying node. Take care with assigning these capabilities and assign the least number of privileges needed. For more information, see Linux capabilities.

- SELinux is a Linux kernel security module that lets you define access policies for services, processes, and filesystem access; SELinux labels let you apply those policies to containers. Again, assign the least number of privileges needed. For more information, see SELinux options in Kubernetes.

Example:

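```yaml
apiVersion: v1
kind: Pod
metadata:
  name: security-context-demo
spec:
  containers:
  - name: security-context-demo
    # Note: the stock nginx image expects to start as root and to write to
    # /var/cache/nginx, so with the settings below it will likely fail to start.
    # In practice, use an image built to run as non-root with a read-only filesystem.
    image: nginx:1.15.5
    securityContext:
      runAsUser: 1000                  # run the container process as a non-root UID
      allowPrivilegeEscalation: false  # block setuid-style privilege gains
      readOnlyRootFilesystem: true     # the container cannot write to its own filesystem
      capabilities:
        drop: ["ALL"]                  # start from zero Linux capabilities
```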

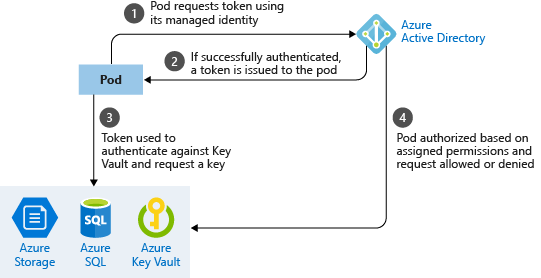

Use AAD Pod Identity

A managed identity for Azure resources lets a pod authenticate itself against Azure services that support it, such as Storage or SQL. The pod is assigned an Azure identity that lets it authenticate to Azure Active Directory and receive a digital token. This digital token can be presented to other Azure services, which check whether the pod is authorized to access the service and perform the required actions. This approach means that no secrets are required for database connection strings, for example. The simplified workflow for pod managed identity is shown in the following diagram:

With a managed identity, your application code doesn’t need to include credentials to access a service such as Azure Storage. Because each pod authenticates with its own identity, you can audit and review access. If your application connects with other Azure services, use managed identities to limit credential reuse and the risk of exposure.

For more information about pod identities, see Configure an AKS cluster to use pod managed identities
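With the open-source AAD Pod Identity project installed, the wiring is done with two custom resources and a pod label, roughly as sketched below. All names, IDs and the image are placeholders; the field names follow the project’s documentation as I recall it (some older releases spelled them `ResourceID`/`ClientID`), so check the README for the version you install.

```yaml
apiVersion: aadpodidentity.k8s.io/v1
kind: AzureIdentity
metadata:
  name: my-app-identity            # hypothetical name
spec:
  type: 0                          # 0 = user-assigned managed identity
  resourceID: /subscriptions/<subscription-id>/resourcegroups/<resource-group>/providers/Microsoft.ManagedIdentity/userAssignedIdentities/<identity-name>
  clientID: <client-id>            # placeholder: the identity's client ID
---
apiVersion: aadpodidentity.k8s.io/v1
kind: AzureIdentityBinding
metadata:
  name: my-app-identity-binding
spec:
  azureIdentity: my-app-identity   # the AzureIdentity above
  selector: my-app                 # matched against the pod's aadpodidbinding label
---
apiVersion: v1
kind: Pod
metadata:
  name: my-app
  labels:
    aadpodidbinding: my-app        # this pod receives tokens for my-app-identity
spec:
  containers:
  - name: my-app
    image: myregistry.azurecr.io/my-app:1.0.0   # hypothetical image
```

Only pods carrying the matching label can obtain tokens for that identity, which keeps each workload’s Azure permissions separate.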

Use a Containers Security Solution

Deploying a container security solution will help your organization gain full visibility and security across the entire application lifecycle and infrastructure, as well as centrally detect and prevent threats. Such a solution would:

- Scan image registries for vulnerabilities and compliance

- Integrate with CI/CD pipelines to scan at build time

- Provide container runtime protection and control

- Block non-compliant Pods/Containers

- Provide a centralized Container Security Management

Be sure to evaluate and choose a solution that scans for vulnerabilities in OS packages and in third-party runtime libraries for the programming languages your software uses.

To address CVEs found in your internally maintained images, your organization should have a policy for updating and replacing already-deployed images known to have serious, fixable vulnerabilities. Image scanning should be part of your CI/CD pipeline process, and images with high-severity, fixable CVEs should generate an alert and fail the build.

If you also deploy third-party container images in your cluster, scan those as well. If those images have serious fixable vulnerabilities that do not seem to get addressed by the maintainer, you should consider creating your own images for those tools.

Secure the Network

Limit Node SSH Access

By default, the SSH port on the nodes is open to all pods running in the cluster. Preventing direct SSH access from the pod network to the nodes helps limit the potential blast radius of damage if a container in a pod becomes compromised.

You can block pod access to the nodes’ SSH ports using a Kubernetes Network Policy, if network policy is enabled in your cluster. However, the Kubernetes Network Policy API does not support cluster-wide egress policies: network policies are namespace-scoped, so you must make sure a policy is added to every namespace, which requires ongoing vigilance.

For clusters using the Calico CNI for network policy, users have another option. Calico supports additional Kubernetes resources beyond the standard Kubernetes network policy types, including GlobalNetworkPolicy, which can apply to the entire cluster.

Restrict Cluster Egress Traffic

By limiting egress network traffic only to known, necessary external endpoints, you can limit the potential for exploitation by compromised workloads in your cluster.

AKS provides several options for controlling cluster egress traffic. They can be used separately or together for better protection.

- Use Kubernetes network policies to limit pod egress endpoints. Policies need to be created for every namespace or workload.

- Use the Calico Network Policy option in AKS, which adds additional resource types to Kubernetes Network Policy, including the non-namespaced GlobalNetworkPolicy.

- Use an Azure firewall to control cluster egress from the VNet. If using this method, note the external endpoints that the cluster’s nodes (not necessarily the workload pods) need to reach for proper functionality and make firewall exceptions as needed.
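As a starting point for the network-policy option, the sketch below denies all egress from pods in a namespace except DNS lookups to the cluster’s CoreDNS pods (labelled `k8s-app: kube-dns` on AKS); allow rules for each known external endpoint would then be added on top. The `my-app` namespace is hypothetical, and this only takes effect on clusters created with a network policy option enabled.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-egress-allow-dns
  namespace: my-app              # hypothetical namespace; repeat for each namespace
spec:
  podSelector: {}                # applies to every pod in the namespace
  policyTypes:
  - Egress                       # any egress not allowed below is denied
  egress:
  - to:
    - namespaceSelector: {}      # any namespace...
      podSelector:               # ...but only the CoreDNS pods
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53
```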

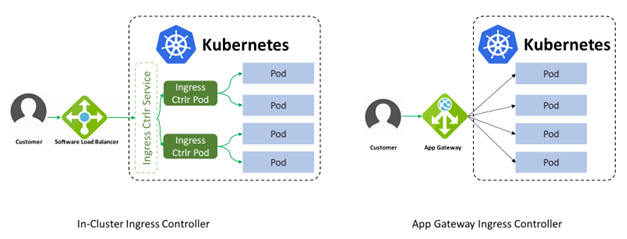

Firewall ingress to apps

Always use a firewall in front of your AKS load balancers to filter traffic and protect your applications from known attacks.

The Application Gateway Ingress Controller (AGIC), which is GA, allows the use of a single Application Gateway to control multiple AKS clusters. It also eliminates the need for another load balancer/public IP in front of the AKS cluster and avoids multiple hops before requests reach the cluster. Application Gateway talks to pods directly using their private IPs and does not require NodePort services or kube-proxy. This also improves the deployment’s performance.

Using Azure Application Gateway in addition to AGIC also helps protect your AKS cluster by providing TLS policy and Web Application Firewall (WAF) functionality.
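With AGIC installed, applications are exposed through ordinary Ingress resources. A minimal sketch, assuming a hypothetical `frontend` Service on port 80, a host name `shop.example.com`, and a TLS certificate stored in a Secret named `frontend-tls`; the WAF policy itself is configured on the Application Gateway, not in this manifest. It uses `networking.k8s.io/v1` (Kubernetes 1.19+); older clusters use `networking.k8s.io/v1beta1` with a slightly different backend syntax.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: frontend-ingress
  annotations:
    kubernetes.io/ingress.class: azure/application-gateway  # hand this Ingress to AGIC
    appgw.ingress.kubernetes.io/ssl-redirect: "true"       # redirect HTTP to HTTPS
spec:
  tls:
  - hosts:
    - shop.example.com           # hypothetical host
    secretName: frontend-tls     # hypothetical TLS secret
  rules:
  - host: shop.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: frontend       # hypothetical service
            port:
              number: 80
```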

Enable Network Policies

Think of network policies as a firewall within your cluster. They help you control which components can communicate with each other. The principle of least privilege should be applied to how traffic can flow between pods in an AKS cluster.

Azure provides two ways to implement network policy. You choose a network policy option when you create an AKS cluster. The policy option can’t be changed after the cluster is created:

- Azure’s own implementation, called Azure Network Policies.

- Calico Network Policies, an open-source network and network security solution founded by Tigera.

Let’s say we have a microservices app deployed to our cluster, composed of the following components and their roles:

- Frontend: serves web traffic

- Message: stores/lists messages

- User: authentication

- Redis

Configuring network policies helps you restrict access to only the pods that require it:

- Frontend should not talk directly to Redis

- Frontend is only allowed to communicate with Message and User

- Message is only allowed to communicate with Redis
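The three rules above could be expressed roughly as follows. This sketch assumes all four components run in the same namespace and that their pods carry the hypothetical labels `app: frontend`, `app: message`, `app: user` and `app: redis`; the DNS rule assumes CoreDNS pods labelled `k8s-app: kube-dns`, as on AKS.

```yaml
# Redis accepts traffic only from Message pods
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: redis-allow-message-only
spec:
  podSelector:
    matchLabels:
      app: redis
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: message
---
# Frontend may only call Message and User (plus cluster DNS)
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: frontend-egress
spec:
  podSelector:
    matchLabels:
      app: frontend
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: message
    - podSelector:
        matchLabels:
          app: user
  - to:
    - namespaceSelector: {}
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
---
# Message may only call Redis (plus cluster DNS)
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: message-egress
spec:
  podSelector:
    matchLabels:
      app: message
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: redis
  - to:
    - namespaceSelector: {}
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
```

Restricting Redis on the ingress side as well as Frontend on the egress side means a misconfiguration in one policy does not by itself reopen the Frontend-to-Redis path.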

Deploy a Service Mesh

A service mesh provides capabilities like traffic management, resiliency, policy, security, strong identity, and observability to your workloads. Your application is decoupled from these operational capabilities and the service mesh moves them out of the application layer, and down to the infrastructure layer.

A service mesh has many uses; below are the ones specific to securing your workloads:

- Encrypt all traffic in cluster - Enable mutual TLS between specified services in the cluster. This can be extended to ingress and egress at the network perimeter. Provides a secure by default option with no changes needed for application code and infrastructure.

- Observability - Gain insight into how your services are connected and the traffic that flows between them. Obtain metrics, logs, and traces for all traffic in the cluster, and for ingress/egress. Add distributed tracing abilities to your applications.

It is worth the effort to evaluate which service mesh offering best meets your organization’s needs. Istio, Linkerd, and Consul can all be deployed to AKS clusters. Service meshes can be quite powerful, but they can also require a great deal of configuration to secure workloads properly.

Consul Connect

Consul is a full-featured service management framework, and the addition of Connect in v1.2 gives it service segmentation (mutual TLS between services) capabilities, which make it a full service mesh. Consul is part of HashiCorp’s suite of infrastructure management products; it started as a way to manage services running on Nomad and has grown to support multiple other data center and container management platforms, including Kubernetes.

Consul Connect uses an agent installed on every node as a DaemonSet, which communicates with the Envoy sidecar proxies that handle routing and forwarding of traffic.

Architecture diagrams and more product information are available at Consul.io.
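As an illustration, when Consul is installed on AKS with its Helm chart and Connect injection enabled, a pod opts into the mesh with annotations along these lines. The pod, image and upstream names are hypothetical; with the upstream annotation, the application reaches the `message` service through its local sidecar at `localhost:9091`, and the sidecars handle mutual TLS.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend                                   # hypothetical pod
  annotations:
    consul.hashicorp.com/connect-inject: "true"    # ask the injector to add an Envoy sidecar
    consul.hashicorp.com/connect-service-upstreams: "message:9091"  # hypothetical upstream
spec:
  containers:
  - name: frontend
    image: myregistry.azurecr.io/frontend:1.0.0    # hypothetical image
```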

Istio

Istio is a Kubernetes-native solution that was initially released by Google, IBM and Lyft, and a large number of major technology companies have chosen to back it as their service mesh of choice. Google and IBM, for example, offer Istio as a managed option in their respective Kubernetes cloud services.

Istio has separated its data and control planes by using a sidecar proxy which caches information so that it does not need to go back to the control plane for every call. The control planes are pods that also run in the Kubernetes cluster, allowing for better resilience in the event that there is a failure of a single pod in any part of the service mesh.

Architecture diagrams and more product information are available at Istio.io.
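For example, the mutual TLS capability mentioned earlier can be enforced mesh-wide in Istio with a single resource. This sketch assumes Istio 1.5 or later (earlier releases used a `MeshPolicy` resource instead) and the default root namespace `istio-system`.

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default                # "default" in the root namespace applies mesh-wide
  namespace: istio-system      # assumed Istio root namespace
spec:
  mtls:
    mode: STRICT               # sidecars reject plain-text traffic from other workloads
```

Rolling out `PERMISSIVE` first and switching to `STRICT` once every workload has a sidecar avoids cutting off services that are not yet meshed.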

Linkerd

Linkerd is arguably the second most popular service mesh on Kubernetes and, due to its rewrite in v2, its architecture closely mirrors Istio’s, with an initial focus on simplicity instead of flexibility. This, along with it being a Kubernetes-only solution, results in fewer moving pieces, which means that Linkerd has less complexity overall. While Linkerd v1.x is still supported and runs on more container platforms than Kubernetes, new features (like blue/green deployments) are focused primarily on v2.

Linkerd is unique in that it is part of the Cloud Native Computing Foundation (CNCF), which is the organization responsible for Kubernetes. No other service mesh is backed by an independent foundation.

Architecture diagrams and additional product information are available at Linkerd.io.
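With Linkerd 2.x installed, meshing a whole namespace is a matter of one annotation, sketched below with a hypothetical `my-app` namespace. Pods created in it afterwards get the Linkerd proxy injected, and traffic between meshed pods is encrypted with mutual TLS by default (in the 2.x releases of this era, for HTTP and gRPC traffic).

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-app                  # hypothetical namespace
  annotations:
    linkerd.io/inject: enabled  # inject the Linkerd proxy into new pods in this namespace
```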

Next and Beyond

There are other important security options you should consider to make your AKS clusters more secure. The following are not GA yet and therefore are not suited for production until they become GA. However, you can start testing these features in PoC environments and get ready to roll them out once they are promoted from their preview state.

Create Private Clusters

Private AKS clusters have all their control plane components, including the cluster’s Kubernetes API service, in a private RFC1918 network space. This limits access and keeps all traffic within Azure’s networks. You can lock down access to the API to specific VNets. Without this feature, the cluster’s API has a public IP address and all traffic to it, including from the cluster’s node pools, goes over public network.

Note that private clusters do have some limitations: connections to the cluster API that don’t originate from the cluster’s node pools require an Azure VM, such as a bastion or jump box, in a network that can reach the API. Azure also charges for the Private Endpoint resource needed to make the Kubernetes API available to VNets.

Note: The private cluster option can only be selected at AKS cluster creation time.

Pod Security Policy (PSP)

Available in upstream Kubernetes and currently in preview on AKS, Pod Security Policy enables fine-grained authorization of pod creation and updates. It allows you to set up policies to validate pod requests and define a set of conditions that a pod must run with in order to be scheduled on the AKS cluster.

A Pod Security Policy is a cluster-level resource that controls security sensitive aspects of the pod specification. The PodSecurityPolicy objects define a set of conditions that a pod must run with in order to be accepted into the system, as well as defaults for the related fields. Pod Security Policies address several critical security use cases, including:

- Preventing containers from running with privileged flag - this type of container will have most of the capabilities available to the underlying host.

- Preventing sharing of host PID/IPC namespace, networking, and ports - this step ensures proper isolation between Docker containers and the underlying host.

- Limiting use of volume types - writable hostPath directory volumes, for example, allow containers to write to the filesystem in a manner that allows them to traverse the host filesystem outside the pathPrefix, so readOnly: true must be used.

- Putting limits on host filesystem use.

- Enforcing a read-only root file system via the readOnlyRootFilesystem field.

- Preventing privilege escalation to root privileges.

- Rejecting containers with root privileges.

- Restricting Linux capabilities to bare minimum in adherence with least privilege principles.

Some of these attributes can also be controlled via securityContext. However, it is generally recommended not to rely on customizing the pod-level security context, and to enforce these settings with Pod Security Policies instead.
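A minimal sketch of a restrictive policy covering several of the use cases above is shown below, together with the RBAC binding that authorizes the service accounts of one namespace to use it; without such a binding, pods are rejected once the admission controller is on. The policy name and the `my-app` namespace are hypothetical. Note that the PodSecurityPolicy API (`policy/v1beta1`) was later deprecated in Kubernetes 1.21 and removed in 1.25, so this applies only to clusters that still support it.

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: restricted                     # hypothetical name
spec:
  privileged: false                    # no privileged containers
  allowPrivilegeEscalation: false      # no escalation to root privileges
  requiredDropCapabilities: ["ALL"]    # bare-minimum Linux capabilities
  hostNetwork: false                   # no sharing of host networking...
  hostIPC: false                       # ...IPC namespace...
  hostPID: false                       # ...or PID namespace
  readOnlyRootFilesystem: true
  runAsUser:
    rule: MustRunAsNonRoot             # reject containers running as root
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: MustRunAs
    ranges: [{min: 1, max: 65535}]
  fsGroup:
    rule: MustRunAs
    ranges: [{min: 1, max: 65535}]
  volumes:                             # no hostPath in the allowed volume types
  - configMap
  - emptyDir
  - projected
  - secret
  - downwardAPI
  - persistentVolumeClaim
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: psp-restricted
rules:
- apiGroups: ["policy"]
  resources: ["podsecuritypolicies"]
  resourceNames: ["restricted"]
  verbs: ["use"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: psp-restricted
  namespace: my-app                    # hypothetical namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: psp-restricted
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:serviceaccounts:my-app  # all service accounts in the namespace
```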

Azure Policy for AKS

AKS now offers, in Preview, an integration with Azure Policy. Azure Policy for AKS uses the Open Policy Agent (OPA) Gatekeeper admission controller to enforce a variety of best practices by preventing non-conforming objects from getting created in an AKS cluster. While some overlap of Pod Security Policy capabilities exists, OPA allows restrictions not just on pods, but on virtually any attribute of any cluster resource.
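Azure Policy manages the admission rules for you from policy assignments. To show what a rule on a non-pod resource looks like, the sketch below is instead written directly for a standalone Gatekeeper (v3) install: it uses Gatekeeper’s well-known required-labels template to reject any namespace without an `owner` label. The constraint name and the label are hypothetical.

```yaml
apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: k8srequiredlabels
spec:
  crd:
    spec:
      names:
        kind: K8sRequiredLabels          # the constraint kind this template creates
      validation:
        openAPIV3Schema:
          properties:
            labels:
              type: array
              items:
                type: string
  targets:
  - target: admission.k8s.gatekeeper.sh
    rego: |
      package k8srequiredlabels

      # Report a violation when any required label is missing
      violation[{"msg": msg, "details": {"missing_labels": missing}}] {
        provided := {label | input.review.object.metadata.labels[label]}
        required := {label | label := input.parameters.labels[_]}
        missing := required - provided
        count(missing) > 0
        msg := sprintf("you must provide labels: %v", [missing])
      }
---
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ns-must-have-owner               # hypothetical constraint
spec:
  match:
    kinds:
    - apiGroups: [""]
      kinds: ["Namespace"]               # applies to namespaces, not pods
  parameters:
    labels: ["owner"]
```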


2020-02-25 00:00 +0000